One more wibble about Typst: I used it for the latest Path of Cunning fanzine with @jgd. I would say “we used it” but our workflow tends to be that we both discuss the next thing to do and then I poke the layout engine until it produces the right sort of output.

But my goodness it is so much more pleasant an experience than doing layout in LibreOffice, which was how we did issues 1-5. It’s a combination of small things (can make the table of contents work the way we want it to and update automatically, can build things like the next issue deadline date automatically from the release date) and big things (an image stays where you put it and doesn’t suddenly decide to jump to the opposite column or the previous page just because you changed something later in the document). More generally, because everything except the images is plain text, once a thing is done it is done, so rather than a note to future me saying “click on this, then that, then that” I can just write a wrapper function that will do all those things the way we want them done (and if we ever make a big layout change, like a different header font, I can update that once and it’ll be right everywhere).
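As a rough illustration of the deadline calculation: the Typst code itself isn't shown, so this is a shell analogue of the same date arithmetic, not what the zine actually uses. It assumes GNU `date`. The ten-week gap and the function name `deadline_from_release` are made up for the example.

```sh
# Hypothetical helper: derive the next issue's deadline from a release date.
# Assumes GNU date (-d) and an invented default gap of 10 weeks.
deadline_from_release() {
    # $1: release date as YYYY-MM-DD; $2: weeks until the deadline (default 10)
    date -u -d "$1 +${2:-10} weeks" +%Y-%m-%d
}

deadline_from_release 2024-01-01   # prints 2024-03-11
```

Once the gap lives in one function, changing the publishing schedule means changing one number rather than re-checking dates by hand.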

And thanks to pandoc we can still accept submissions in LibreOffice, MS Word, etc., and convert them as part of the layout process.

Sounds great. Scripted layouts FTW.

It reminded me of my PDF film festival ticket hack. My film festival habits tend to be “completely ignore it” or “go to literally dozens of films”. The first year that they did online sales with PDF tickets, I discovered that the PDFs were “one ticket per page”, with a smallish proportion of the page containing “actual ticket” and the majority containing “boilerplate text which was repeated on every other page too”.

I was unwilling to rely upon my phone and equally unwilling to waste dozens of pages printing them, so I manually cropped, cut, and pasted tickets in bulk onto a small number of new pages, and printed those. That was such an annoying process that the next year I spent the time figuring out how to automate it.

The PDF layout that they use has remained the same in all the years since, and so I’ve barely had to touch the script in the interim. Each year I book tickets, I just throw the massive multi-page PDF at my script, and it gives me back a PDF with a 2x6 grid of tickets on each page, and I print that instead.

Sharing it below, less because I think other NZIFF-goers will see it here, and more in case the general framework is helpful for anyone with a similar need!

```sh
#!/bin/sh

if [ -z "$1" ] || [ "$1" = "-h" ] || [ "$1" = "--help" ]; then
    cat <<EOF
Usage:
$(basename "$0") file.pdf [dimensions]
$(basename "$0") - [dimensions]

Process file.pdf to produce a smaller output pdf with the tickets
arranged in a grid format. The grid defaults to 2x6, but any "XxY"
value may be supplied as the dimensions argument.

file.pdf is expected to be a collection of Ticketek tickets; the
parameters were designed for New Zealand International Film Festival
tickets, but may apply to Ticketek tickets in general.

The file argument may be "-" to read the file from standard input.
EOF
    exit 0
fi

# Check dependencies.
aptget=
command -v evince >/dev/null \
    || aptget="${aptget} evince"
command -v pdftoppm >/dev/null \
    || aptget="${aptget} poppler-utils"
command -v montage >/dev/null && command -v convert >/dev/null \
    || aptget="${aptget} imagemagick"
if [ -n "${aptget}" ]; then
    printf "Required utilities not found. Install the following:\n" >&2
    printf "sudo apt-get install%s\n" "${aptget}" >&2
    exit 1
fi

tmpdir=$(mktemp -d -t ticketek.XXXXXXXXXX)
if [ $? -ne 0 ]; then
    printf %s\\n "Unable to create temporary directory." >&2
    exit 1
fi

# Dimensions of the resulting grid of tickets.
xy=${2:-2x6}

# Establish the input and output files.
file=$1
if [ "${file}" = "-" ]; then
    # The output file will be written to the temporary directory.
    pdf="grid-${xy}.pdf"
else
    if [ ! -f "${file}" ]; then
        printf "No such file: %s\n" "${file}" >&2
        exit 1
    fi
    file=$(readlink -f "${file}")
    # Output to a derivative of the original filename, indicating dimensions.
    pdf="${file%.pdf}-${xy}.pdf"
fi

# Convert PDF to cropped image files
# Tile images XxY per page (2x6 by default)
# Create PDF of all pages
# Open in evince PDF viewer
# (Montage output pages are zero-padded, page-000.png, page-001.png, ...,
# so the glob keeps them in order even when there are more than ten pages.)
################################################################################
cd "${tmpdir}" \
    && pdftoppm -gray -png -W 1080 -H 450 -x 50 -y 340 "${file}" ticket \
    && montage ticket-[0-9]*.png -geometry 1080x450 -tile "${xy}" page-%03d.png \
    && convert page-*.png "${pdf}" \
    && evince "${pdf}"
```
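The grid arithmetic is easy to check by hand: `montage -tile XxY` puts X columns by Y rows on each page, so the number of sheets to print is a ceiling division. A small sketch, assuming the same "XxY" argument format as the script; `pages_needed` is a hypothetical helper, not part of it.

```sh
# Hypothetical helper: how many output pages for N tickets on an XxY grid?
pages_needed() {
    # $1: number of tickets; $2: grid as "XxY" (default 2x6, as in the script)
    n=$1
    xy=${2:-2x6}
    cols=${xy%%x*}          # text before the "x"
    rows=${xy#*x}           # text after the "x"
    per_page=$((cols * rows))
    # Integer ceiling division: round up so a partial page still counts.
    echo $(( (n + per_page - 1) / per_page ))
}

pages_needed 30        # 12 per page, prints 3
pages_needed 30 3x8    # 24 per page, prints 2
```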

It’s weird. Many people clearly think of a PDF as this monolithic Thing that can’t be changed, when in fact it’s more like a container format for chunks of text and image — and they can be extracted again.

Hooray! I’ve recently started communicating the inefficiencies of GUI/WUI based workflows (and workflow documentation) to my management. If a process is to be repeatable, it should be extremely, easily repeatable; to me, that means “automation”.

Adobe’s biggest victory, then. I know I’ve encountered dozens of people who assume that PDF content is immutable. If I had fewer scruples, I would exploit that assumption to great profit, I suppose.

I was afraid that this would go slower than with a GUI program, but it did not. The “Roger tinkers with layout” intervals were shorter, much happier for Roger, and produced repeatable, reliable output. It’s great!

I have to admit that it didn’t occur to me to try to extract images directly from the PDF. I’m just using tools to render and crop each page at known positions, saving the resulting images, and re-assembling them into a new document. I wonder whether accessing the ticket images directly would have remained as stable over so many years as what I actually did? The visual output has always been consistent for my purposes, but I’ve no idea whether the PDF internals have done the same. Something for me to look into the next time I have a need to do something with PDFs, though.

What are your preferred tools for manipulating PDF data?

poppler-utils. In particular pdfimages, which you can tell to dump all the images from a range of pages or from the whole thing. The thing about that versus screenshotting is that you get the original image in the resolution at which they embedded it. However, if they used a transparency mask or various other sorts of manipulation those won’t come out in an obviously reproducible way. (When building monster pawn images for my GURPS Pathfinder game a while back, I ended up looking for the two largest bitmaps on a page and then using a heuristic to see which one was the mask.)
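A sketch of the "two largest bitmaps on a page" step in shell, built on `pdfimages -list`, which prints a table with one row per embedded image. It assumes that table's usual layout (two header lines, then columns page, num, type, width, height, ...); `largest_two` is a hypothetical name, and the mask-detection heuristic mentioned above isn't reproduced, only the type column is shown.

```sh
# Print the two largest images on one page, reading `pdfimages -list` output.
# Assumes the column order: page num type width height ...
largest_two() {
    # $1: page number; table on stdin. Output lines: "area num type".
    awk -v page="$1" 'NR > 2 && $1 == page { print $4 * $5, $2, $3 }' \
        | sort -k1,1nr \
        | head -n 2
}

# Typical use (needs poppler-utils and a real PDF):
#   pdfimages -list rulebook.pdf | largest_two 12
# A type of "smask" in the output marks a soft (transparency) mask.
# To dump the images themselves from pages 12 to 14 as PNG files:
#   pdfimages -png -f 12 -l 14 rulebook.pdf pawn
```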

Also the Perl library PDF::API2 which is probably meant mostly for creating PDFs but has some useful tools for mucking about with them.

pdftk is a good general utility.

cpdf (non-commercial use only) has a “strip out images” which I often use before printing something.

And the ultimate interactive tool is good old inkscape; pdfimages does a great job with bitmaps, but if a rulebook has vector images it’ll generally recover them in a clean way. (E.g. so that I can include the actual icon in the mini-rulebook I’m writing.)

Good stuff. The poppler-utils package provided the pdftoppm utility that I used in my script. I was aware of poppler at the time on account of the pdf-tools Emacs package (for reading, searching, annotating PDFs) which uses poppler behind the scenes to do the heavy lifting.

Might be a stretch because I didn’t code it. But I made the chatbot do it… does that count?

I now have a script that searches Kleinanzeigen for prices for games I want to sell and calculates an average and the number of offers.

This script is super simplistic, so it lumps Sleeping Gods in with Distant Skies. I might refine it. Mostly I want to pick out games that seem to have a good chance of selling because there is not an overabundance of offers…
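For anyone wanting to do something similar, the averaging step is a few lines of `awk`. This sketch assumes the scraping has already produced hypothetical tab-separated "title<TAB>price" lines (the chatbot-written script isn't shown); `price_summary` is a made-up name, and the optional exclusion pattern is one way to stop a search for one game also counting listings for its sequel.

```sh
# Hypothetical helper: count matching listings and average their prices.
# Input on stdin: "title<TAB>price" lines, price as a plain number.
price_summary() {
    # $1: words the title must contain; $2: optional words that exclude it
    awk -F '\t' -v want="$1" -v skip="$2" '
        {
            title = tolower($1)
            if (title ~ tolower(want) && (skip == "" || title !~ tolower(skip))) {
                sum += $2
                n++
            }
        }
        END {
            if (n == 0) { print "no offers"; exit }
            printf "%d offers, average %.2f\n", n, sum / n
        }'
}
```

Usage: `printf 'Sleeping Gods\t40\nSleeping Gods Distant Skies\t60\n' | price_summary 'sleeping gods' 'distant skies'` counts only the first listing.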

I am just too lazy to do the actual work myself.

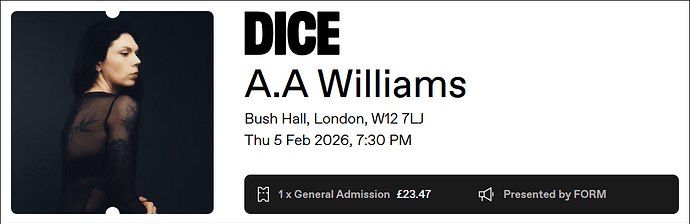

Over the last year or two there seems to have been a shift away from paper tickets to e-tickets for gigs.

It seemed a shame that my scrap-book would have no more stubs added to it so I’ve been creating mock ticket stubs.

I’d been doing this in Paint.NET but it was a bit of a tedious process so today I brushed up my HTML and CSS and wrote a small webpage that lets me generate a ticket stub. Here’s one based on the DICE website/app.

I predominantly use the DICE app for e-ticket purchases as it tends to have the best prices, they have a pretty generous returns policy and they do their best to prevent touts.

I do however have a couple of gigs coming up where the venues have their own app. Guess I’ll need to knock up a couple more webpages.

In the US, paper tickets were well on their way out before Covid. Covid gave everyone an excuse to stop using them entirely. Bit of a shame, for the reasons you mentioned. On the other hand, e-tickets are harder to forget.

There are some other strange failures though. Last year, we were at a baseball game, in rather posh seats. My wife and daughter went to go see the rest of the stadium, and couldn’t get back to the seats because I had the tickets on my phone, and since we were already in, couldn’t transfer them to her. I had to go up and get them.

I have physical tickets in my scrapbook up to the end of '22 then printed e-tickets for a period after that until they got replaced by apps.

I’ve noticed at some smaller venues they don’t bother scanning the QR/bar-code and will either just wave you through on trust or check your name off a list.

Once I turned up at a gig having bought an e-ticket from a company I’d used several times in the past. The box office was one of those that didn’t scan e-tickets, but they’d not printed the list for this particular company. Bouncer scrutinised my phone for a bit and just waved me through.

I was volunteered to help out another team of (junior) engineers who are banging their heads against a build system that I have some experience in.

Since Friday, I’ve been banging my head against it. It’s not helped by the try-fail-retry time being at best tens of minutes and at worst several hours. It’s also another task on my already long list.

Told myself I’d give it until the end of my office day and put it down. I got frustratingly close before leaving but couldn’t get it working.

Decided once I was home to rebuild everything from scratch and do some reading around the subject. Very quickly stumbled across a potential solution.

Had to wait over four hours to be able to try it out. A little after 10pm I closed my work laptop with everything building successfully. Phew.

Slow build systems suck. They do teach the value of desk checking, while fast build systems encourage throwing shit at them to see what breaks.

Many years ago, I ported Perl to Ultrix. (There was already a port, but it was Ultrix on VAX, not Ultrix on MIPS. I didn’t realize this until I’d already committed to doing it.) Initially, failures were fast, because it was autoconf stuff failing, or not doing the right thing, so the compile would blow up. After a few dozen iterations of that, it was deep in the build that it blew up, after six hours or so, then after 20, then after 40. (At the end, it was a three-day build.) I ended up writing a quick-and-dirty script that put back files and fixed modification times so make would ignore stuff I was reasonably sure was right. God, I don’t miss that shit.
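The put-things-back trick might look something like this sketch; the stash directory, file names and `restore_trusted` are all hypothetical. With GNU make, the `-o FILE` (`--assume-old`) option is a less hacky way to say "never remake this file", and `-t` (`--touch`) marks targets up to date without running their recipes.

```sh
# Hypothetical helper: copy trusted build outputs back from a stash and give
# them a fresh mtime, so make sees them as newer than their prerequisites.
restore_trusted() {
    # $1: stash directory holding known-good copies; $2...: paths to restore
    stash=$1
    shift
    for f in "$@"; do
        cp "$stash/$f" "$f" || return 1
        touch "$f"   # mtime = now, newer than any source not edited since
    done
}

# Typical use, after a reconfigure has made everything look stale:
#   restore_trusted ../good-objs op.o sv.o regexec.o && make
```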

The frustrating thing is we know the full rebuild can be done in about 30 minutes. It’s just something on our developer machines that drags it out to several hours. Recently whatever’s been slowing down the builds has also been interrupting them so you have to babysit them.

To the vendor’s credit there’s a 40 page worked example for this esoteric bit of functionality. There are however two mechanisms and rather than provide separate example code files for each, they’ve provided one set of files which merge the two together.

They also completely undersold the importance of whitespace in one of the files I was working with. That lost me half a day.

We’ve had lots of hassle with things like that. Corporate IT keep wanting to add new security monitoring tools, and swear they don’t cause slowdowns, until we demonstrate they’re wrong about that. Their testing is against MS Office usage, not compilations.

The chap who compiles Ever & Anon (the PDF apa which replaced Alarums & Excursions) wrote a bit in last month’s issue about how he does it. Counting pages! Like the animals!
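For the record, the counting can be handed to the computer. This sketch assumes one PDF per contribution, in running order, with no blank pages inserted between them; it uses `pdfinfo` from poppler-utils to read each page count. `page_count` and `start_pages` are hypothetical helper names, not how the compiler actually does it.

```sh
# Hypothetical helper: page count of one PDF, via poppler-utils' pdfinfo.
page_count() {
    pdfinfo "$1" | awk '/^Pages:/ { print $2 }'
}

# Hypothetical helper: starting page of each contribution in the issue.
start_pages() {
    # stdin: "name pages" lines in running order (names without spaces)
    # stdout: "name start-page"
    awk 'BEGIN { page = 1 } { print $1, page; page += $2 }'
}

# Typical use:
#   for f in contrib/*.pdf; do echo "$f $(page_count "$f")"; done | start_pages
```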

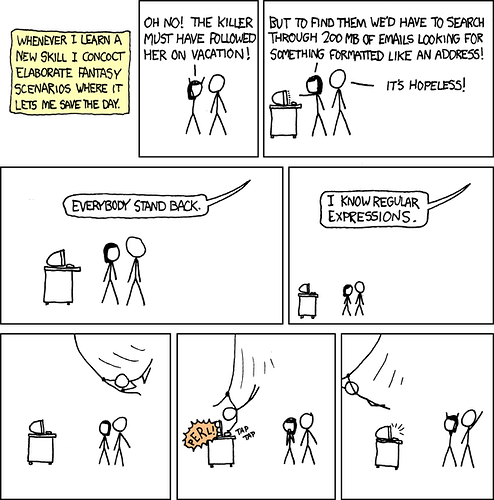

So then more or less this happened.

via

Not quite ready for release, but it’s up and running…

It was with a bit of sadness that I found out that this year’s Advent of Code will only have 12 puzzles. But I guess, just like my yoga YouTube channel, the organizers are overwhelmed by how huge these events have become, and I think in the case of AoC there are also the troubles caused by AI and super-competitive … whatever.

I will of course try my hand at the puzzles we do get. I’ve gratefully used AoC to hone my programming skills and remind me why I studied this stuff, and it has brought joy back into my work life in an unexpected way.